Publish Processed Data to the Data Portal

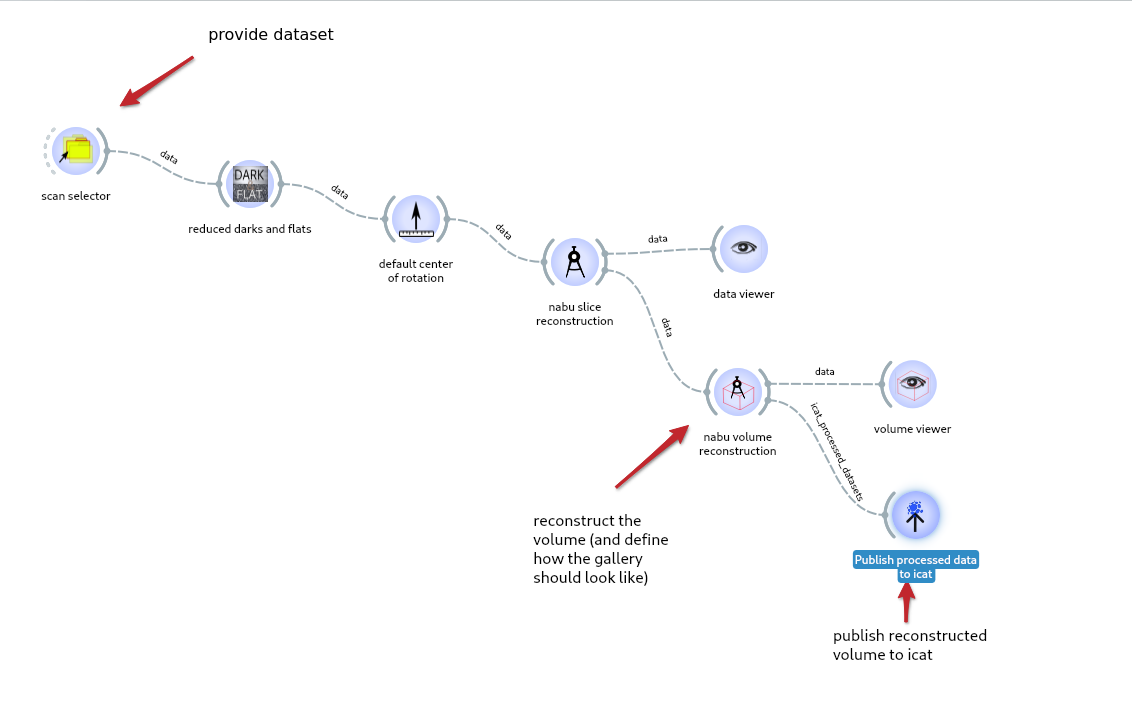

Publishing from Tomwer

Since Tomwer 1.4, some tasks can generate a data_portal_processed_data output. This output can be connected to the Data Portal Publishing task, which publishes the processed data to ICAT.

At the moment, only the nabu volume reconstruction task can create such an output.

An example workflow using the three widgets is available in Help -> Example -> “Reconstruction of a Volume and Publication to the (ESRF) Data Portal”.

Warning

The ‘data portal processed dataset’ (e.g., ‘reconstructed_volumes’…) must have the “PROCESSED_DATA” folder as its parent; otherwise, publication will not occur.
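The parent-folder rule above can be checked locally before you try to publish. The following is a minimal sketch, not Tomwer's own validation: the helper name `has_processed_data_parent` and the example paths are hypothetical, and it assumes that "parent" means the immediate parent directory.

```python
from pathlib import PurePosixPath


def has_processed_data_parent(dataset_dir: str) -> bool:
    """Return True if the immediate parent directory is named PROCESSED_DATA.

    Hypothetical helper: it only inspects the path string and does not
    touch the file system or contact the data portal.
    """
    return PurePosixPath(dataset_dir).parent.name == "PROCESSED_DATA"


# Hypothetical paths, for illustration only.
ok_dir = "/data/visitor/my_proposal/PROCESSED_DATA/reconstructed_volumes"
bad_dir = "/data/visitor/my_proposal/RAW_DATA/reconstructed_volumes"

print(has_processed_data_parent(ok_dir))
print(has_processed_data_parent(bad_dir))
```

The first path satisfies the rule and the second does not, so publication would only be expected to proceed for the first one.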

All information required to publish the processed dataset (e.g., dataset, beamline…) should be retrieved automatically from the .nx file. If this fails, you can provide it manually from the Data Portal Publishing options -> advanced.

Note

To keep processing and publishing to the data portal clearly separated, volume reconstruction now has its own “volume reconstruction” folder.

Publishing from CLI

Publication to the data portal can also be done from the Command Line Interface (CLI) using the pyicat-plus project.

The software is embedded in Tomwer, so you can access it within the same Python environment.

To store processed data, use a command like:

    icat-store-processed --beamline id00 \
        --proposal id002207 \
        --path /data/visitor/path/to/processed/data \
        --dataset testproc \
        --sample mysample \
        --raw /data/visitor/path/to/dataset1
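If you need to publish several processed datasets from a script, the same command can be assembled in Python. The sketch below only builds the argument list using the flags shown above; the helper name `build_store_processed_command` is hypothetical, and the values are the placeholders from the example command.

```python
import shlex


def build_store_processed_command(beamline, proposal, path, dataset, sample, raw):
    """Build the icat-store-processed argument list (hypothetical helper).

    Only the flags shown in the example command above are used.
    """
    return [
        "icat-store-processed",
        "--beamline", beamline,
        "--proposal", proposal,
        "--path", path,
        "--dataset", dataset,
        "--sample", sample,
        "--raw", raw,
    ]


cmd = build_store_processed_command(
    beamline="id00",
    proposal="id002207",
    path="/data/visitor/path/to/processed/data",
    dataset="testproc",
    sample="mysample",
    raw="/data/visitor/path/to/dataset1",
)

# Print the command as a single shell-safe line for inspection.
print(shlex.join(cmd))

# To actually run it (inside the environment where Tomwer is installed):
# import subprocess
# subprocess.run(cmd, check=True)
```

Passing the arguments as a list rather than a single string avoids shell quoting problems if a path contains spaces.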

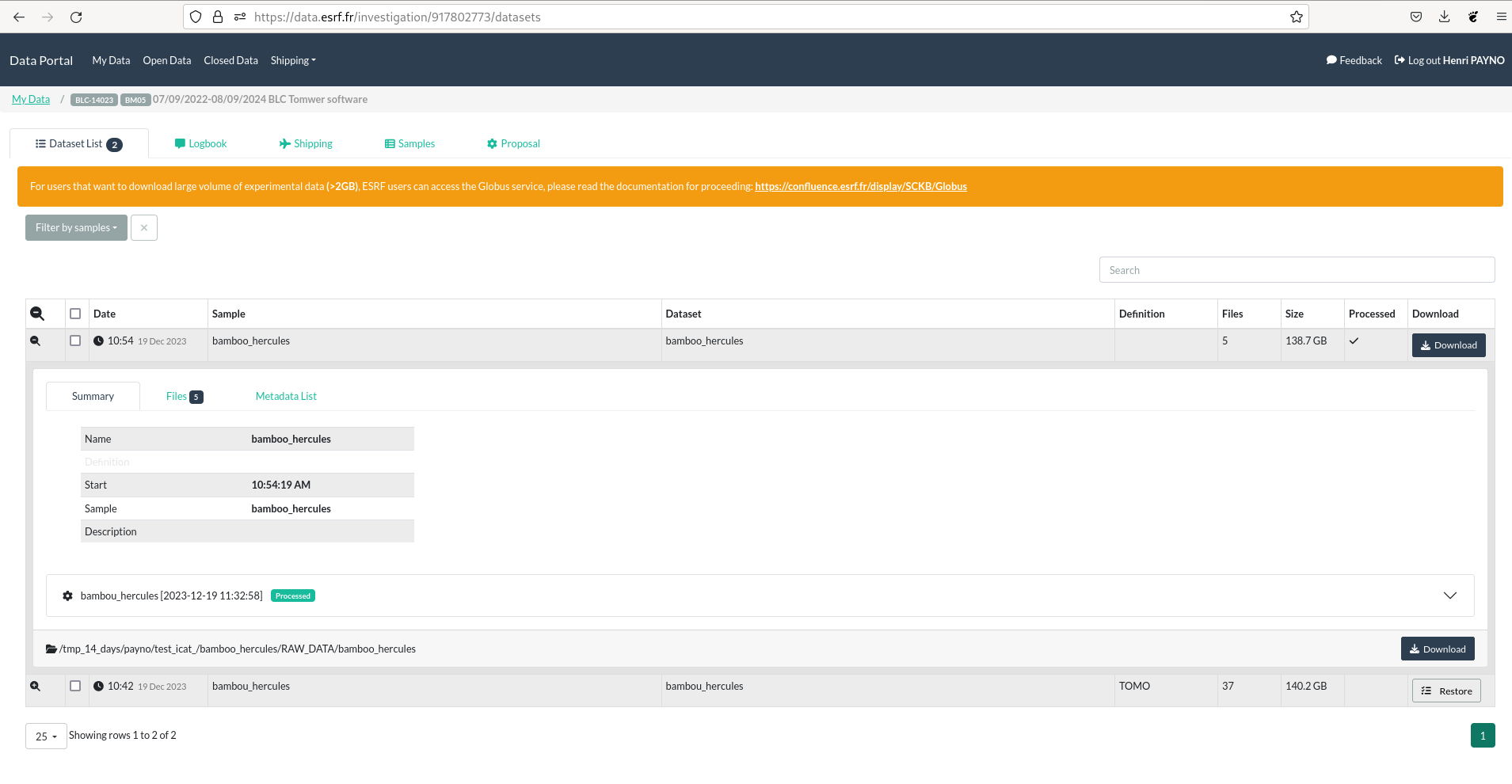

data.esrf.fr

Once your dataset has been published, you should be able to retrieve it from https://data.esrf.fr/.

The different processed datasets should be linked to the raw data.

The raw data publication is handled automatically by Bliss.