Architectural definition

This document addresses the architectural aspects of the tomography software developments for the EBS (Extremely Brilliant Source).

Here we introduce the most important terms and concepts used throughout the document.

Data definitions

- Raw data: detector readings, i.e. the raw images or photon counts per energy channel coming out of the detectors.

- Parameters: information about the experiment that is needed to process the data (e.g. the rotation angle of a given image, the pixel size of the area detector, etc.).

- Data: the set formed by the union of "raw data" and "parameters".

- Metadata: information about the experiment that is NOT needed to process the data (e.g. the temperature of the sample). Such information may be relevant for analysis and interpretation, which are distinct from processing.
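The distinction between these four terms can be sketched as a small data model. This is only an illustration under stated assumptions: the class names (`Parameters`, `Data`, `Scan`) and field names (`rotation_angles_deg`, `pixel_size_um`, `sample_temperature_K`) are hypothetical and do not come from any existing ESRF package.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, List

Image = List[List[float]]  # one detector frame as rows of pixel values


@dataclass
class Parameters:
    """Information that IS needed to process the raw data."""
    rotation_angles_deg: List[float]  # one angle per raw image
    pixel_size_um: float              # area-detector pixel size


@dataclass
class Data:
    """Union of raw data and parameters."""
    raw: List[Image]
    parameters: Parameters

    def __post_init__(self) -> None:
        # Every raw image needs its rotation angle to be processable.
        if len(self.raw) != len(self.parameters.rotation_angles_deg):
            raise ValueError("expected one rotation angle per raw image")


@dataclass
class Scan:
    """Data plus metadata; the processing never reads the metadata."""
    data: Data
    metadata: Dict[str, Any] = field(default_factory=dict)


frame: Image = [[0.0, 0.0], [0.0, 0.0]]
scan = Scan(
    data=Data(raw=[frame, frame], parameters=Parameters([0.0, 90.0], 6.5)),
    metadata={"sample_temperature_K": 293.0},
)
```

Keeping the metadata in a separate container makes it explicit that a processing block only ever needs `scan.data`.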

Processing definitions

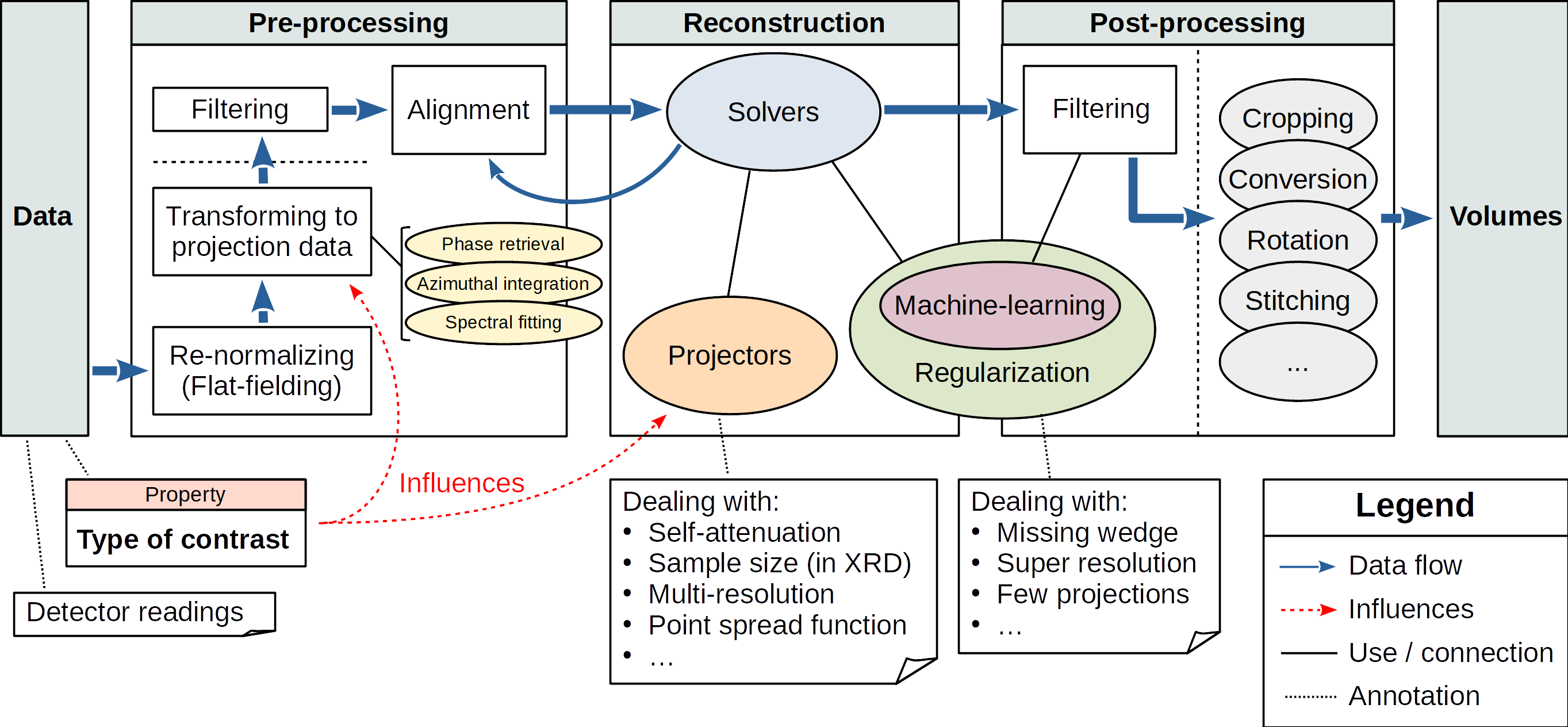

- Tomographic data processing: transformation of the raw data (detector readings) into volumes representing one or more properties of the irradiated sample. The processing is divided into three macro blocks: pre-processing, reconstruction, and post-processing.

- Pre-processing: transformation of raw data into projection data. The nature of the pre-processing is highly technique-dependent. For example, it can involve raw data treatment and filtering, which includes: denoising, some ring artefact correction techniques, rotation center finding, sample drift correction, phase retrieval, extraction of material phases from diffraction patterns, elemental fitting of fluorescence spectra, etc.

- Reconstruction: tomographic inversion of the projection data. This can be performed using various algorithms, including Filtered Back-Projection (FBP) and iterative techniques. The latter group includes: Maximum Likelihood Expectation Maximisation (MLEM), Simultaneous Iterative Reconstruction Technique (SIRT), Fast Iterative Shrinkage-Thresholding Algorithm (FISTA), and advanced solvers such as Chambolle-Pock (CP) and Douglas-Rachford (DR). Advanced iterative reconstruction algorithms (generic solvers) allow the use of various data fitting terms (modelling of the detection noise), and the addition of constraints to the reconstruction problem. These constraints enforce prior knowledge about the morphology of the reconstruction. More recently, machine-learning (ML) based techniques have been gaining a lot of traction, and have been shown to deliver significant improvements over previous approaches.

- Post-processing: transformation of the final volumes, which can include two types of operations: filtering and volume manipulation. The former includes standard artefact removal techniques (e.g. ring artefact corrections) and novel methods based on ML. The latter includes: binning, cropping, rotation, stitching, and data-format conversion.

- Regularization: while the exact definition is rather broad, we use the term here for the techniques and algorithms used to extract the desired information from the data. It becomes particularly important when the data is incomplete or affected by high levels of noise. ML and prior-knowledge constraints are examples of technologies belonging to this field.

Figure 1 summarizes the main actors and aspects of tomographic data processing.
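As an illustration of the reconstruction block, the following is a minimal pure-Python sketch of SIRT with an optional lower bound on the solution, which plays the role of a simple prior-knowledge constraint (non-negativity). It assumes a small dense projection matrix `A` with non-negative entries and a measurement vector `b`; the function name `sirt` and the toy 2x2 geometry are hypothetical and only serve the example. A real implementation would rely on GPU projectors rather than explicit matrices.

```python
from typing import List, Optional

Matrix = List[List[float]]
Vector = List[float]


def sirt(A: Matrix, b: Vector, n_iter: int = 50,
         lower_bound: Optional[float] = 0.0) -> Vector:
    """Toy SIRT: x <- x + C A^T R (b - A x), followed by an optional clip."""
    n_rays, n_pix = len(A), len(A[0])
    # R and C are the inverse row and column sums of A (zero-safe).
    row_sums = [sum(row) for row in A]
    col_sums = [sum(A[i][j] for i in range(n_rays)) for j in range(n_pix)]
    row_w = [1.0 / s if s else 0.0 for s in row_sums]
    col_w = [1.0 / s if s else 0.0 for s in col_sums]
    x = [0.0] * n_pix
    for _ in range(n_iter):
        # Weighted residual between measured and forward-projected data.
        res = [row_w[i] * (b[i] - sum(A[i][j] * x[j] for j in range(n_pix)))
               for i in range(n_rays)]
        # Back-project the residual and update the current estimate.
        x = [x[j] + col_w[j] * sum(A[i][j] * res[i] for i in range(n_rays))
             for j in range(n_pix)]
        if lower_bound is not None:
            # Constraint step: enforce the prior knowledge x >= lower_bound.
            x = [max(lower_bound, v) for v in x]
    return x


# 2x2 image [1, 2, 3, 4] (row-major), probed by two row sums and two column sums.
A = [[1.0, 1.0, 0.0, 0.0],
     [0.0, 0.0, 1.0, 1.0],
     [1.0, 0.0, 1.0, 0.0],
     [0.0, 1.0, 0.0, 1.0]]
b = [3.0, 7.0, 4.0, 6.0]
volume = sirt(A, b)
```

For this toy system the iterates approach the original image `[1, 2, 3, 4]`. In general, an under-determined system like this one admits many solutions, which is where regularization becomes relevant.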

Technical definitions

- Pipeline or workflow: definition of successive processing operations that turn raw data into reconstructed and filtered volumes. Each experimental technique requires a different pipeline. Software such as TomWer (Tomography Workflows for ESRF) allows a pipeline to be defined from building blocks. The same could be achieved with simple custom-made scripts.

- Framework: a suite of essential building blocks that can be assembled to create a pipeline.

- Workload distribution: the act of distributing the workload of a given processing block across a group of nodes or local resources (e.g. CPU cores or GPUs).

- Stateful as opposed to stateless computation: in the former, processing blocks are aware of the processing context, while in the latter they operate "blindly" on the data. In a stateful computation paradigm, it is possible both to pass processing contexts around and to keep track of the work done. This makes it possible to use (and re-use) resources more efficiently, and to resume computation after an interruption.

- Waterfall development (WD): the most common development practice, which assumes a fairly long incubation period. During this period, the developers research the topic, implement the code using stable interfaces, test it, and document it extensively. WD works well for long projects in established software companies that do not face much competition in their product space, or for projects that outright prioritize quality over features. WD is not appropriate for the development of cutting-edge features in a much more dynamic and feature-competitive environment. Notable examples of software developed with WD are: healthcare management software, operating systems, office suites, etc.

- Agile development (AD): in contrast to WD, it assumes a shorter release and development cycle (normally between 4 and 6 weeks), with a larger number of (smaller) iterations on the same development task. This allows better feedback from users/customers (and fine-tuning of the software for them) before stabilization, and makes the newest features available from an early stage. AD is suitable for quickly evolving fields or feature-competitive environments. Notable examples of software developed with AD are: video players, browsers, social media apps, etc.
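The pipeline, workload distribution, and stateful computation concepts defined above can be combined in a short sketch. It is a simplified illustration, not the TomWer API: the names `Context`, `distribute`, `run_pipeline`, `subtract_dark`, and `scale` are hypothetical, and a local thread pool stands in for a group of nodes, CPU cores, or GPUs.

```python
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass, field
from typing import Callable, List, Sequence

Chunk = List[float]
Block = Callable[[Chunk], Chunk]


def subtract_dark(chunk: Chunk) -> Chunk:
    """Stateless building block: only sees the data it is given."""
    return [v - 1.0 for v in chunk]


def scale(chunk: Chunk) -> Chunk:
    """Another stateless building block."""
    return [2.0 * v for v in chunk]


@dataclass
class Context:
    """Processing context: current data plus a record of the work done."""
    chunks: List[Chunk]
    done: List[str] = field(default_factory=list)


def distribute(block: Block, chunks: Sequence[Chunk], workers: int = 2) -> List[Chunk]:
    """Workload distribution: apply one block to all chunks in parallel."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(block, chunks))  # map() preserves chunk order


def run_pipeline(blocks: Sequence[Block], ctx: Context, workers: int = 2) -> Context:
    """Stateful execution: blocks already recorded in ctx.done are skipped."""
    for block in blocks:
        if block.__name__ in ctx.done:
            continue  # applied in an earlier (interrupted) run
        ctx.chunks = distribute(block, ctx.chunks, workers)
        ctx.done.append(block.__name__)
    return ctx


ctx = Context(chunks=[[1.0, 2.0], [3.0, 4.0]])
run_pipeline([subtract_dark], ctx)         # simulates a run interrupted after one block
run_pipeline([subtract_dark, scale], ctx)  # resumes: subtract_dark is not repeated
```

Because the context records which blocks have run, the second call applies only `scale`. A purely stateless runner would have subtracted the dark level twice.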